There’s a lot of talk about trusting AI these days, and rightly so. We’re putting these systems into decisions that matter: healthcare, hiring, pricing, customer service, even policing. When something goes wrong, we ask: Can I really trust AI to get this right?

But underneath that question is a tougher one: Do I trust anything to get this right?

The Myth of Perfection in Work

Let’s start with the obvious. AI is not infallible. It can make mistakes. Sometimes the mistakes are silly ones, sometimes harmful ones. It can misinterpret context, reinforce bias, hallucinate facts, or optimize for the wrong thing entirely. And when it makes those mistakes, it tends to do so with confidence and speed. That’s unnerving.

But before we give up on AI entirely, it’s worth looking at the baseline we’re comparing it to: human decision-making. Because humans make mistakes too. Lots of them. We misremember. We generalize from too few examples. We have cognitive biases – confirmation bias, recency bias, anchoring, you name it. We get tired, stressed, distracted. Sometimes we let ego get in the way. Sometimes we act on gut instinct that’s not as reliable as we think.

In most workplaces, at least until very recently, we’ve relied on processes built to catch and correct human error. A nurse double-checks a dosage. A peer reviewer challenges an assumption in a report. A manager spots a pattern that doesn’t quite add up. These checks aren’t perfect, but they’re familiar. They give us a sense of control.

When AI enters the picture, the failure modes shift. You might not get a gut feel that something’s off. You might not even know what question to ask. Mistakes can look different, more alien, or more subtle. That’s where trust can break down. But just as with human error, we need processes in place to catch and correct these mistakes.

How do we handle it?

Some of it looks like what we already do with humans: we review decisions, we look at outcomes, we ask for justification. We build systems that let people intervene. But AI also opens new doors. We can log every step of its reasoning. We can rerun scenarios with different variables. We can even use AI to audit other AI through techniques like evaluation, testing, or reflection (more on that in another article).
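
To make the logging and rerunning ideas concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: `toy_pricing_model` is a hand-written rule standing in for a real AI system, and `DecisionRecord`, `run_logged`, and `rerun_with` are hypothetical names, not part of any particular product or library. The idea is simply that each decision leaves behind a record a person can review, and that the same decision can be replayed with one variable changed to see whether the outcome moves.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical stand-in for an AI system: any callable that takes a dict of
# inputs plus a `log` function and returns a decision.
Model = Callable[[dict, Callable[[str], None]], str]


@dataclass
class DecisionRecord:
    inputs: dict
    steps: list = field(default_factory=list)  # logged reasoning steps
    output: Any = None


def run_logged(model: Model, inputs: dict) -> DecisionRecord:
    """Run the model once and keep a record a human can review later."""
    record = DecisionRecord(inputs=dict(inputs))
    record.output = model(inputs, record.steps.append)
    return record


def rerun_with(model: Model, record: DecisionRecord, **changes) -> DecisionRecord:
    """Replay a recorded decision with some input variables changed."""
    return run_logged(model, {**record.inputs, **changes})


def toy_pricing_model(inputs: dict, log) -> str:
    """Toy rule-based placeholder, not a real model."""
    log(f"order_total={inputs['order_total']}")
    if inputs["order_total"] >= 100:
        log("total is at least 100, so a discount applies")
        return "discount"
    log("total is under 100, so no discount applies")
    return "full_price"


original = run_logged(toy_pricing_model, {"order_total": 120, "region": "EU"})
variant = rerun_with(toy_pricing_model, original, region="US")
flipped = rerun_with(toy_pricing_model, original, order_total=80)

print(original.output, original.steps)
print("region changed the decision:", variant.output != original.output)
print("order_total changed the decision:", flipped.output != original.output)
```

In this toy case, changing the region leaves the decision untouched while changing the order total flips it. That is the kind of check a reviewer might run to confirm a system responds to the variables it should and ignores the ones it shouldn’t.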

The point isn’t to say “AI is better” or “AI is worse.” It’s to recognize that all decision-making, human or machine, comes with risk. What matters is how visible those risks are, how we handle them, and how we learn from them.

Trust doesn’t mean blind faith. It means understanding the kinds of mistakes that are likely to happen, having a plan to catch and correct them, and being transparent about what’s working and what’s not.

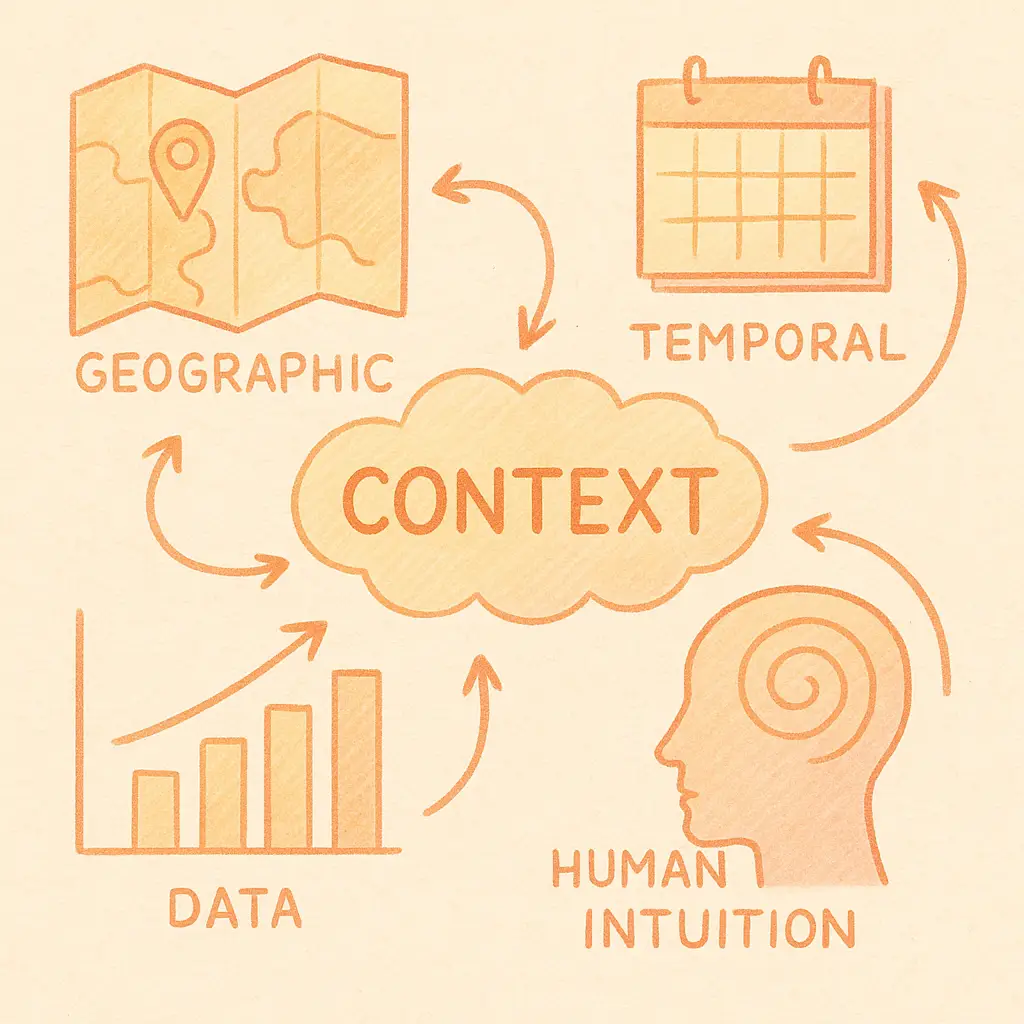

At Bordo, we do not assume trust is given. We believe it is earned. We see AI not as a replacement for human decision-making, but as part of a larger system, one that includes people, context, data, and feedback loops. That system has to be reviewable, resilient, and transparent enough to earn real trust. That’s the standard we’re building toward.