Anyone who has consumed any form of media over the past few years will have heard about the veritable mountain of issues with artificial intelligence (AI). Though AI is not a new technology, the gusto and hype with which large language models (LLMs) leapt into the mainstream over the past year caused an inevitable flurry of cynicism (the "Trough of Disillusionment" in Gartner's Hype Cycle).

If we view these criticisms through a design thinking and user experience lens, we may realize we're at a crossroads in how we design this now-popularized technology so that it truly serves users.

To illustrate this philosophy, in the paper Design in the Age of Artificial Intelligence, Roberto Verganti reminds us how crucial user experience (UX) is when bringing new technologies to market: "Rather than being driven by the advancements of technology and by what is possible, design-driven innovation stems from understanding a problem from the user perspective, and from making predictions about what could be meaningful…" This design-driven innovation process can help ensure we're building towards delightful experiences instead of the horror stories that so often make headlines.

Let’s explore some possible ways empathy and user-centered design show up in AI product design.

Ability to Explain Decision Making

The first way AI products can be designed with users in mind is to empathize with a user's desire to know how decisions are made. This desire is especially strong in complex domains, where explainability can be central to usability and delight. In many use cases, a black-box output is simply not a sufficient basis for making decisions, particularly when a decision affects others or has downstream consequences.

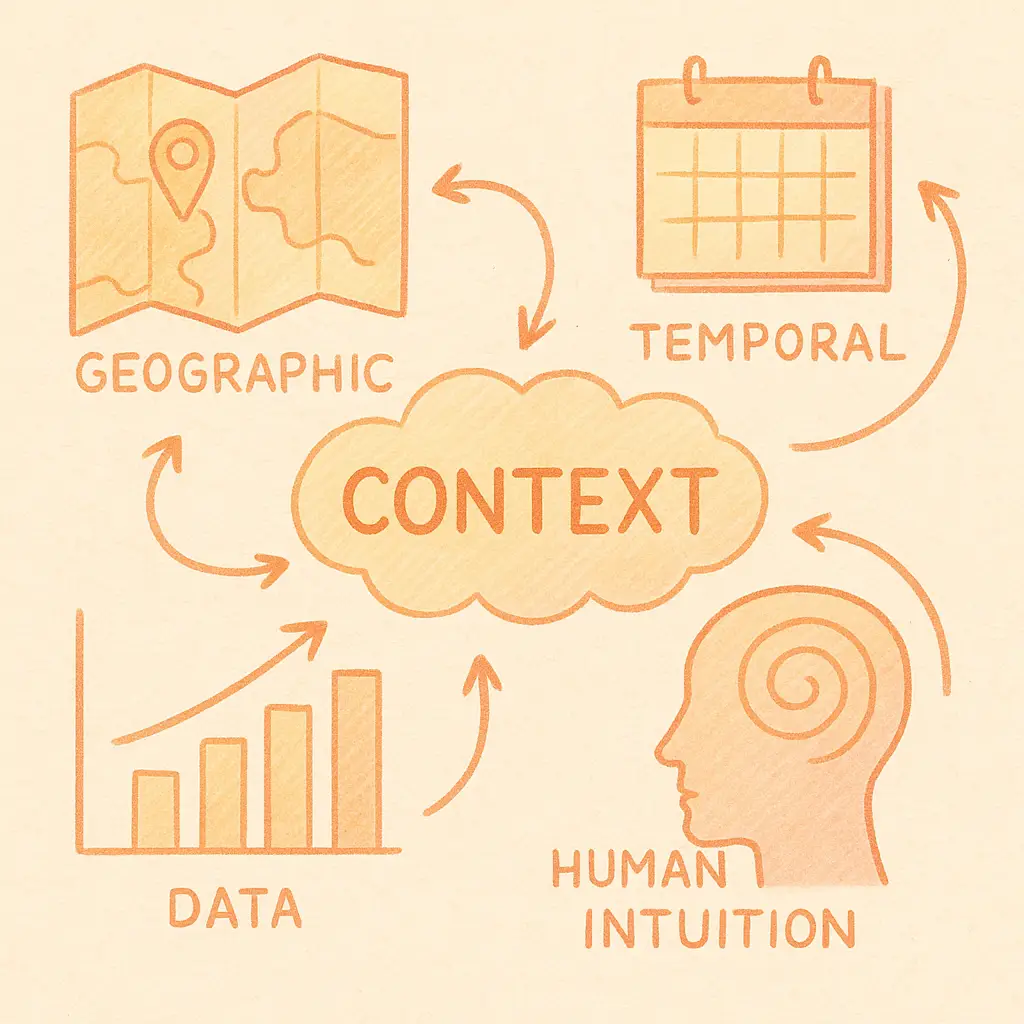

For example, consider the medical insurance industry, where we can see the consequences of using black-box AI to reject certain medical claims automatically. This AI auto-rejection or claims review process may also confuse internal company users, who may not understand why a claim was rejected. Even systems that flag claims for review rather than rejecting them often give no explanation for why a claim or provider was flagged. In cases like this, empathy for both internal users and end users should make built-in explainability a requirement. We should also recognize that explainability alone may not always be enough; where that's true, we should provide context-aware AI that lets users readily examine the circumstances surrounding a decision when necessary.

Human+AI Collaboration

A second way we can design AI products with greater user empathy is to involve the user in co-creating the outputs they need. In this model, the AI product becomes an assistant to the user instead of the other way around.

One example of this type of involvement is a user feedback loop, in which users review a system's output and give feedback on its quality, correctness or accuracy in real time. Such loops could raise early red flags when large language models hallucinate or even "snowball," compounding an early error with further false claims made to stay consistent with it.

Involving the user in co-creation could also mean reimagining how users interact with outputs, so that complex analysis becomes accessible to users with differing levels of technical expertise.

AI Where Users Need It

Part and parcel of involving the user is the assumption that user research has been conducted and that AI is being applied to the right use cases. This brings us to a final way we can empathize with users as we develop AI products: recognizing that AI should be thought of as augmented intelligence.

Augmented intelligence means building towards a future where AI complements users' abilities and helps them grow in areas where they need additional support. Shifting to a user-centric perspective means empathizing with users' fears and feelings about AI. It also means finding creative ways to design AI methods and applications that climb out of the Trough of Disillusionment and into delightful experiences designed, above all else, for humans.

Our Commitment to Human-Centered AI

At Bordo, we believe that AI should be built not just with technological prowess, but with deep empathy for the people who use it. That's why we approach every AI solution through the lens of user experience, transparency, and collaboration. Whether we're designing explainable systems, creating intuitive interfaces, or embedding user feedback into the development cycle, our goal is to craft AI tools that empower rather than alienate. As the AI landscape continues to evolve, we remain committed to designing with humans at the center, because we know that meaningful innovation starts with understanding.