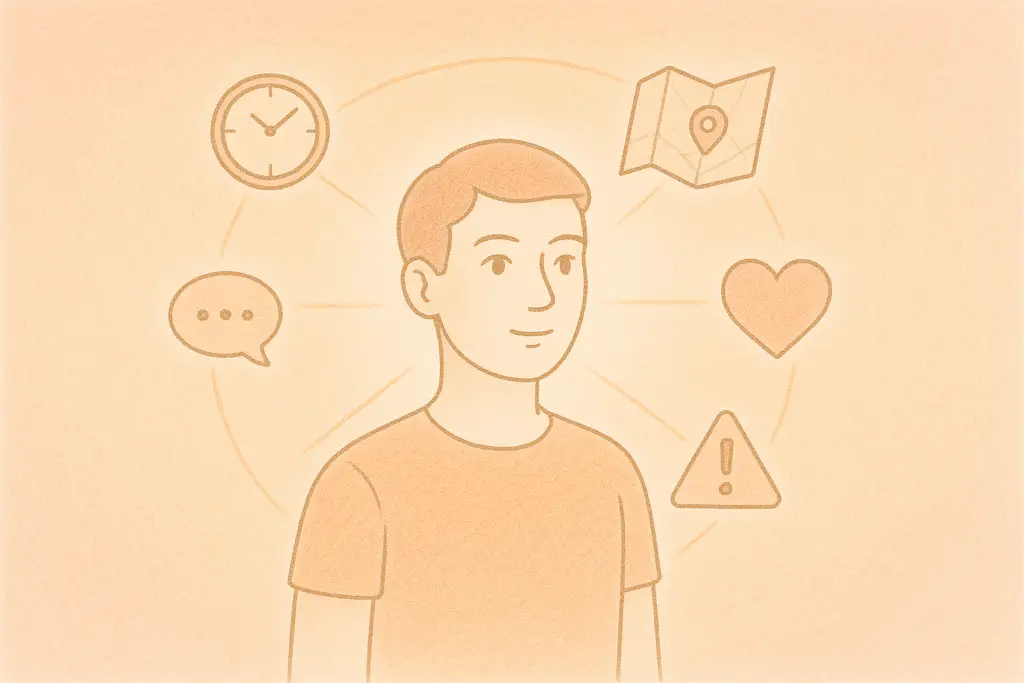

When decision-makers rely on large language models (LLMs) or other AI systems, they’re not just adopting a tool. They’re also accepting the risk that comes from delegating judgement to a system they didn’t build and may not fully understand. Trusting the output of an LLM means having confidence not only that it is factually accurate, but also that it reflects the right assumptions, context, and constraints. Hallucinations (i.e. situations where the model confidently outputs false or misleading information) undermine this trust and can lead to costly mistakes. This is why many AI tools display a warning to double-check important information.

Without visibility into how an answer was formed, what data it was based on, or which assumptions were made, leaders may act on flawed information without realizing it. The result is a kind of blind delegation, which can be dangerous in environments that call for sensitive or high-risk decisions. For this reason, traceability, transparency, observability, and understandability are all essential for anyone using an AI system to support or automate decision-making.

Elements We Believe Foster Trust

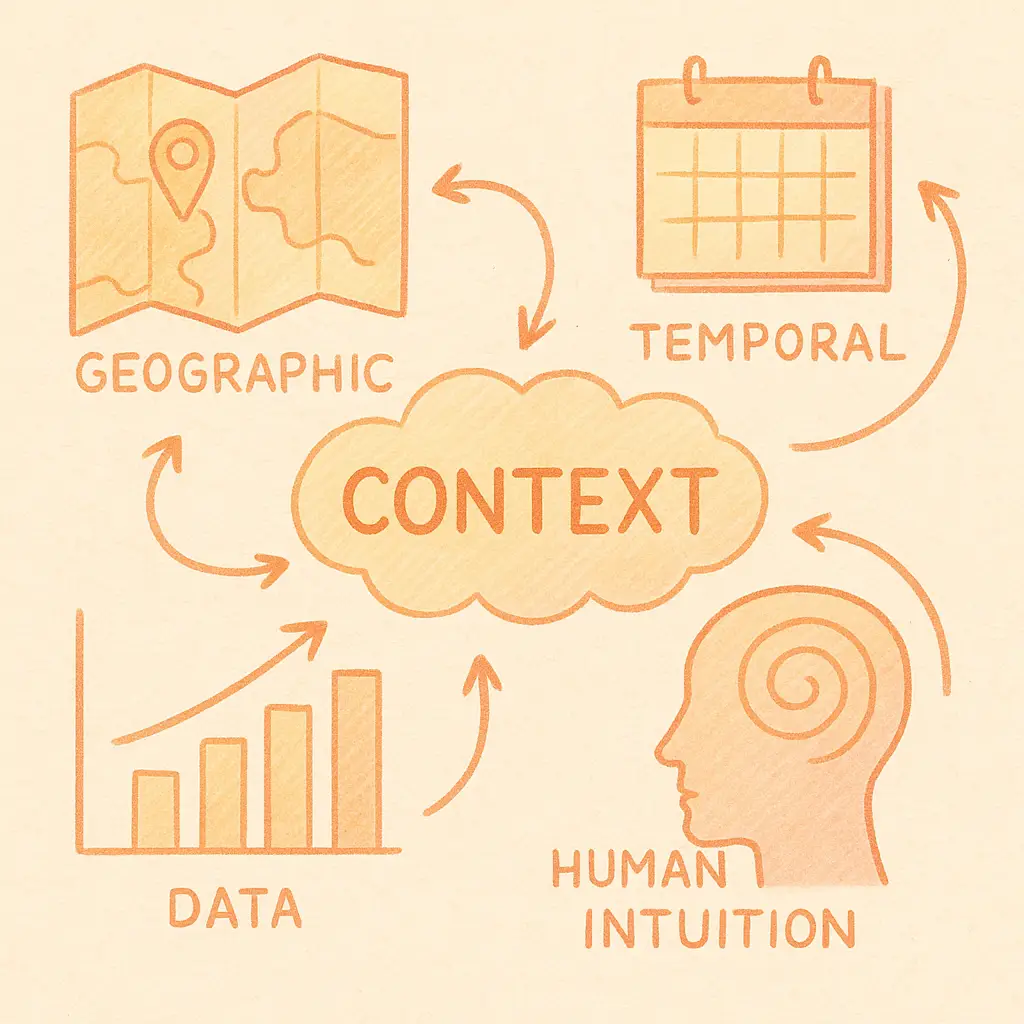

Traceability — Can we follow the path back?

This is about lineage. Where did the data come from? What models, rules, or inputs were used? What decisions were made, and in what order? This is useful for audits, debugging, and accountability. It helps answer: “If we had to explain this later, could we?”

Think: logs, decision history, inputs and outputs.
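
To make the idea of lineage concrete, here is a minimal sketch of a decision record kept in an append-only log. It is an illustration only, not a description of any particular platform: the names `DecisionRecord`, `DecisionLog`, and every field are hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One traceable decision. All field names are illustrative assumptions."""
    decision_id: str
    inputs: dict    # what the system was asked
    sources: list   # where the supporting data came from
    model: str      # which model or rule set produced the output
    output: str     # what the system decided or answered
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionLog:
    """Append-only history: records are added in order and never edited."""

    def __init__(self):
        self._records = []

    def append(self, record):
        self._records.append(record)

    def history(self):
        # Return a copy so callers cannot reorder or drop entries.
        return list(self._records)

    def explain(self, decision_id):
        # Answers "if we had to explain this later, could we?"
        for record in self._records:
            if record.decision_id == decision_id:
                return json.dumps(asdict(record), indent=2, sort_keys=True)
        raise KeyError(decision_id)
```

Because each record stores inputs, sources, and the model alongside the output, a later audit can reconstruct what was decided and in what order without relying on anyone's memory.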

Transparency — Are we willing to show what matters?

This is about exposure with intent. It is not enough for the information to be “available somewhere.” We surface the right information, in the right way, so that someone can understand what’s going on. That builds trust and keeps the system aligned with expectations.

Think: what users (internal or external) are shown—and why.

Observability — Can we monitor what the system is doing in real time?

This is about system awareness. It answers: Is the system behaving as expected? Is anything broken, drifting, or off course? Observability is more about signals and metrics than specific decisions. Can we show whether the system is healthy and doing its job?

Think: metrics, alerts, dashboards, feedback loops.
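
As a rough sketch of what a signal and an alert could look like, the example below tracks a rolling average of a health metric and flags when it drops below a threshold. The class name `RollingMetric`, the window size, and the threshold are all assumptions chosen for illustration.

```python
from collections import deque


class RollingMetric:
    """Rolling average over the most recent `window` observations."""

    def __init__(self, window, alert_below):
        self.values = deque(maxlen=window)  # old values fall off automatically
        self.alert_below = alert_below

    def record(self, value):
        self.values.append(value)

    def mean(self):
        if not self.values:
            return None  # no data yet, so no signal either way
        return sum(self.values) / len(self.values)

    def is_alerting(self):
        current = self.mean()
        return current is not None and current < self.alert_below
```

One possible use is to record `1.0` for each answer that passed validation and `0.0` for each that did not. A falling average then shows drift in the system as a whole, independent of any single decision.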

Understandability — Can people actually make sense of it?

This is the human layer. You can have all the traceability and transparency in the world, but if the person on the other end doesn’t understand what they’re seeing (or what to do with it), it’s all noise. Can someone make informed decisions based on what we’re showing them?

Think: narrative, explanation, mental models, user empathy.

How We Implement This at Bordo

As we build our platform, we keep the following in mind:

- Transparency should appear when and where it’s relevant, not as a separate feature to hunt for.

- Transparency should be discoverable, but not disruptive.

- Explanations should start simple, with the ability to drill down (progressive disclosure).

- The system should match the explanation style to the user’s role and intent.

- No user should see more data than their role reasonably calls for, but explanations should still feel complete within their scope.

- Every decision should be explainable in human terms, not only via logs or code tracebacks.
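
The principles above (progressive disclosure, role-aware explanations, and scoped access) can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Bordo's implementation: the roles in `ROLE_MAX_LEVEL`, the `Explanation` fields, and the `render` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    summary: str  # level 0: one plain-language sentence
    details: str  # level 1: the reasoning and key inputs
    trace: str    # level 2: the full record for audit


# Hypothetical roles and the deepest level each one may see.
ROLE_MAX_LEVEL = {"viewer": 0, "analyst": 1, "auditor": 2}


def render(explanation, role, requested_level=0):
    """Return the explanation layers up to the requested depth,
    capped by what the role is allowed to see."""
    max_level = ROLE_MAX_LEVEL.get(role, 0)  # unknown roles get the summary only
    level = min(max(requested_level, 0), max_level)
    layers = [explanation.summary, explanation.details, explanation.trace]
    return layers[: level + 1]
```

Every role starts with the summary and drills down only on request, and the cap means that a request for more detail than the role allows still returns a complete answer within that role's scope.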

For any decision the system makes, we ask:

- What did the system know when it acted? (and how is it connected to this situation?)

- What decision was made? (and can we explain why simply?)

- Who is seeing it? (and are we respecting their level of access and comprehension?)

Our goal at Bordo is to provide quality results that can be trusted and validated, and to share what you need when you need it.