Everyone’s talking about the latest models, the biggest context windows, the newest breakthroughs. But here’s what rarely gets mentioned: many AI implementations fail not because the technology isn’t good enough, but because they don’t understand context.

That word, “context,” is doing a lot of work here. It can take many forms, but in this article we’ll focus on two: context windows and context as situational awareness.

“Context” in Large Language Models

In the world of Large Language Models (LLMs), “context” usually means the context window: how much information a model can consider at once. Think of it as the model’s working memory for a given interaction. Early LLMs could only handle a few pages of text at once, which limited their ability to work with extended information. Today’s models, like Google’s Gemini, have much larger context windows and can process long documents, or several documents together, in a single pass. This lets them incorporate more background into a single analysis, which can lead to more coherent outputs. However, while this technical capacity is impressive, it’s not the only type of context we’ll focus on here.
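
To make the “working memory” idea concrete, here is a minimal Python sketch of trimming a conversation so it fits a fixed budget. The word-based `count_tokens` helper and the `max_tokens` value are simplifying assumptions for illustration; real models use their own tokenizers and limits.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words (assumption)."""
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: deque[str] = deque()
    used = 0
    # Walk backwards from the newest message and stop once the budget is full.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.appendleft(message)  # restore chronological order
        used += cost
    return list(kept)


history = [
    "Q3 revenue grew eight percent year over year.",
    "Churn rose in the SMB segment.",
    "What should we prioritize next quarter?",
]
window = fit_to_window(history, max_tokens=12)
```

With this small budget, the oldest message falls out of the window, which is exactly how earlier information gets “forgotten” when an interaction outgrows the model’s working memory.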

Context as Situational Awareness

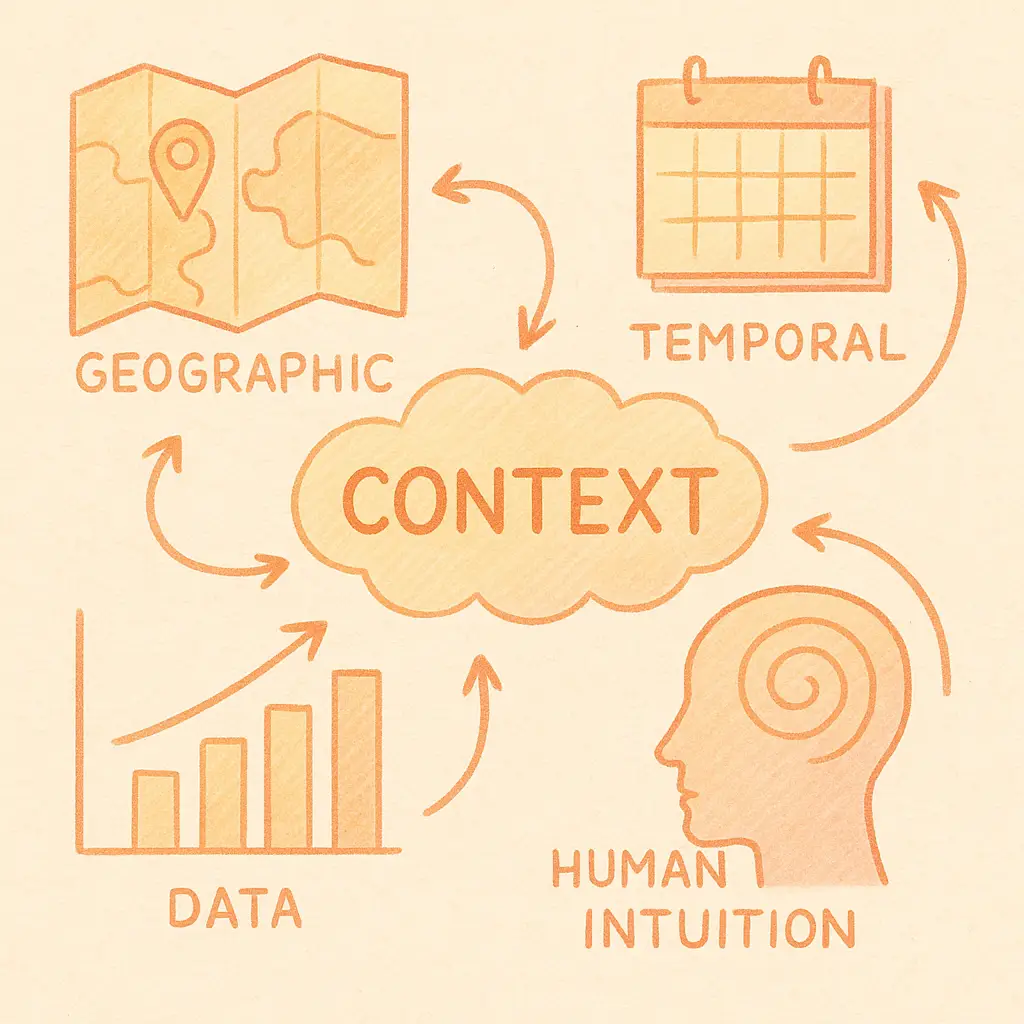

In contrast to the technical memory of models, context as situational awareness refers to the surrounding circumstances or information that give meaning to a piece of data. It’s the “who, when, where, and why” that gives clarity to the “what.” Without it, information can be ambiguous or misleading. With it, information gains the richness and specificity that allow for accurate interpretation and effective action. It’s why human communication is so robust: we implicitly understand and use vast amounts of context in every interaction.

Where AI Fails Without Context, Humans Struggle to Scale It

Context is the underlying force that determines whether your AI delivers real business value or becomes another expensive experiment gathering digital dust. You wouldn’t trust recommendations from a consultant who ignored your company history, market position, and current challenges. Yet that’s exactly what many AI implementations do: they operate in a vacuum, disconnected from the real-world context that makes decisions meaningful.

Whether they are executives or experienced analysts, decision-makers don’t rely solely on numbers. They consider a rich set of factors such as market conditions, seasonal patterns, competitive moves, and organizational history. They synthesize information from multiple sources and apply nuanced understanding developed over years. Context shows up everywhere in your business, and gathering, understanding, and effectively using it is critical. Unfortunately, this is also where human-driven processes often break down. Teams spend significant time manually chasing down this context. As a result, your domain experts, the people hired to solve complex problems, get stuck piecing together information from spreadsheets, documents, and databases instead of thinking strategically. This operational drag is costly and inefficient.

Context-aware AI systems can relieve this burden by gathering and factoring in relevant context at scale, with speed and consistency, effectively augmenting your experts’ capabilities. As AI systems become more sophisticated, mirroring this human ability to grasp context is critical for them to perform complex tasks effectively and reliably. The best AI implementations don’t replace human judgment but instead amplify it by handling the contextual heavy lifting.

The Intersection of Model Memory and Meaning

The failure we mentioned earlier, where AI implementations don’t understand context, often happens at the point where the two types of context are supposed to come together but don’t. On one side, there’s the model’s context window: what it can “see” or remember at any given time. On the other, there’s the broader situational context: the business realities, history, constraints, and nuance that shape what a good decision actually looks like. When these two are disconnected, even the most advanced models can miss the mark. Closing this gap requires a deliberate approach, one that ensures the right context is provided at the right time. Enter “context engineering.”

Context engineering is the practice of systematically supplying AI systems with the right contextual information so they produce higher-quality outputs. As Andrej Karpathy puts it: “In every industrial-strength LLM app, context engineering is the delicate art and science of filling the context window with just the right information for the next step.”

Context engineering involves:

- Smart information architecture: Organizing your enterprise knowledge so AI can find what’s relevant

- Dynamic context assembly: Automatically pulling together pertinent information based on the specific query

- Context prioritization: Determining which contextual elements matter most for different decisions

- Real-time context management: Keeping your workflow or session current with the right information
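
As a rough illustration of dynamic context assembly and context prioritization, the Python sketch below ranks candidate snippets against a query and packs the best ones into a fixed budget. Everything in it is hypothetical: the `Snippet` type, the word-overlap `relevance` score, the `priority` weights, and the sample data are assumptions chosen for readability, and a production system would more likely rely on search or embeddings and a real tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str      # where the snippet came from, e.g. "crm" or "wiki"
    text: str
    priority: float  # hypothetical business weight; higher means more important


def relevance(query: str, text: str) -> float:
    """Toy relevance score: fraction of query words that appear in the text."""
    query_words = set(query.lower().split())
    text_words = set(text.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & text_words) / len(query_words)


def assemble_context(query: str, snippets: list[Snippet], max_words: int) -> str:
    """Rank snippets by relevance * priority and pack them into a word budget."""
    scored = [(relevance(query, s.text) * s.priority, s) for s in snippets]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    chosen, used = [], 0
    for score, snippet in ranked:
        cost = len(snippet.text.split())
        if score == 0 or used + cost > max_words:
            continue  # skip irrelevant snippets and ones that would overflow
        chosen.append(f"[{snippet.source}] {snippet.text}")
        used += cost
    return "\n".join(chosen)


knowledge = [
    Snippet("finance", "renewal pricing for enterprise accounts increased in march", 1.0),
    Snippet("wiki", "office parking rules and visitor badges", 1.0),
    Snippet("crm", "enterprise accounts flagged renewal risk this quarter", 2.0),
]
context = assemble_context("enterprise renewal risk", knowledge, max_words=20)
```

Here the CRM note is ranked first because it matches the query and carries the highest weight, while the unrelated wiki entry is left out entirely. Real-time context management is not covered; it would amount to re-running this kind of assembly as the underlying knowledge or the conversation changes.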

Context engineering represents the evolution of enterprise AI from experimental technology to operational capability. The focus is on designing AI systems that understand your institutional and situational knowledge as holistically as your best people do.

Closing the Context Gap

The future of AI in business won’t be defined by model size alone. It will be defined by how well we bridge the gap between what machines can technically process and what decisions actually require. That means designing systems that don’t just store more information, but understand what matters, when it matters.

At Bordo, we make sure context engineering is an integral part of every AI solution we build. By thoughtfully organizing and delivering the right context to AI systems, we help ensure they augment human expertise effectively by turning data into actionable insights that drive real business value.

Because the question isn’t: can your AI process information?

The real question is: does it understand your world well enough to help you make better decisions?